Series Parts

MICROSOFT WINDOWS 2012 R2 ISCSI TARGET STORAGE SERVER FOR ESXI AND HYPERV

POWERSHELL INSTALLING AND CONFIGURING MICROSOFT ISCSI TARGET SERVER

VMWARE ESXI CONFIGURE (VSWITCH) VMKERNEL NETWORK PORT FOR ISCSI STORAGE

POWERCLI: VMWARE ESXI CONFIGURE (VSWITCH) VMKERNEL NETWORK PORT FOR ISCSI STORAGE

VMWARE ESXI INSTALL AND CONFIGURE SOFTWARE ISCSI STORAGE ADAPTER FOR VMFS VERSION 6 DATASTORE

POWERCLI VMWARE: CONFIGURE SOFTWARE ISCSI STORAGE ADAPTER AND ADD VMFS DATASTORE

VMWARE VCENTER STORAGE MIGRATE/SVMOTION VM AND PORT BINDING MULTIPATHING TESTING

POWERCLI: VIRTUAL MACHINE STORAGE MIGRATE/SVMOTION AND DATASTORE PORT BINDING MULTIPATHING

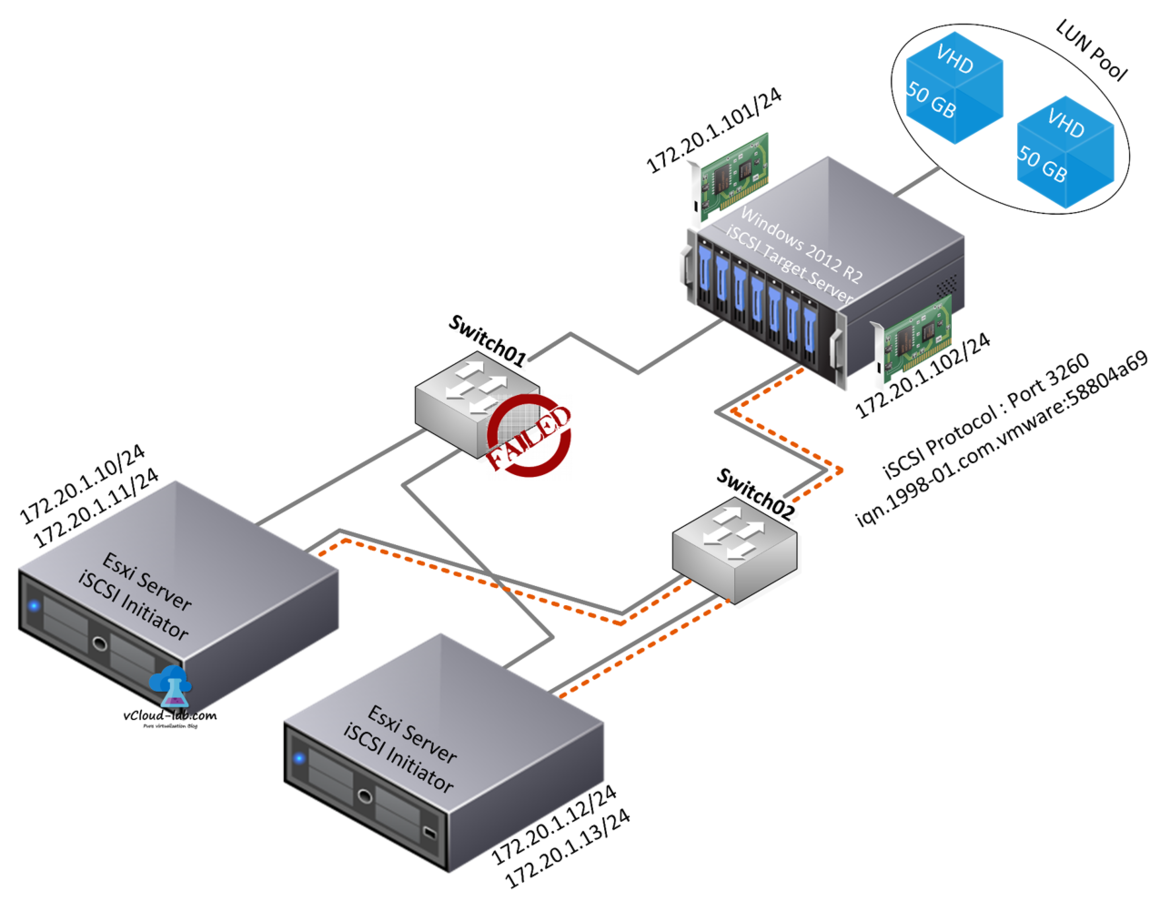

As shown in the diagram below, I have set up the Microsoft storage target server and the ESXi networking VMkernel ports. This is the last part of the series, configuring the ESXi software iSCSI adapter to add storage. Here I will add and enable the software iSCSI adapter (it is the client, also called the initiator). The same steps can be performed using VMware PowerCLI, which will be the subject of my next article.

Warning: Windows iSCSI is not listed on the VMware HCL as an ESXi iSCSI datastore. I am using it for demo purposes only.

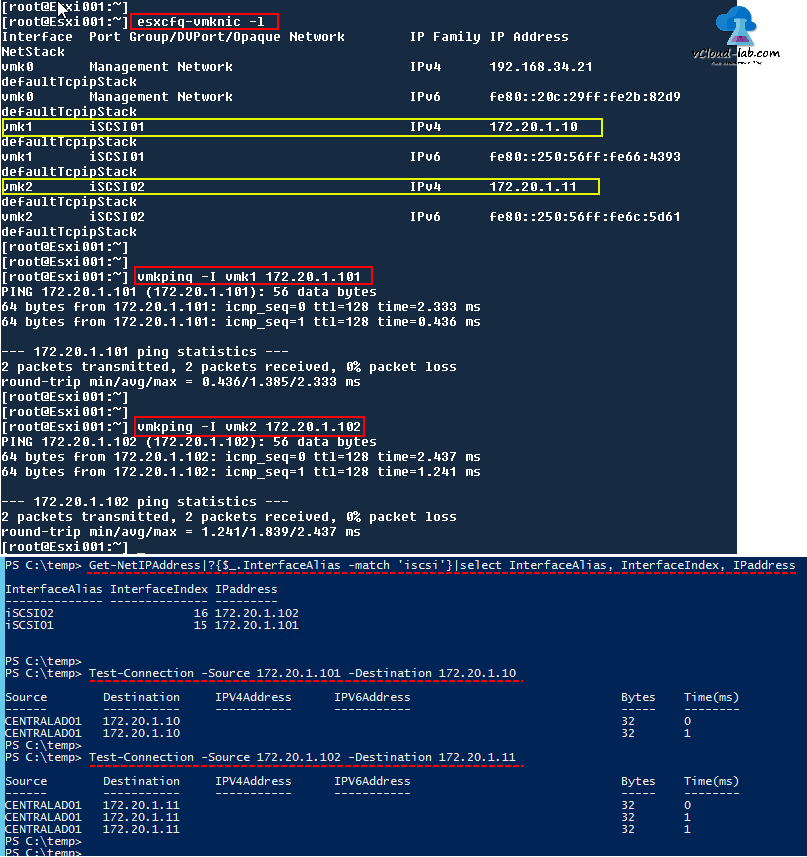

Make sure there is proper connectivity between the ESXi iSCSI VMkernel adapters and the storage target. I check connectivity from the ESXi host as well as from the storage server by pinging each from the other. If SSH is not enabled on the ESXi server, go to Configure tab >> System >> Security Profile >> scroll down and Edit Services >> select SSH >> in Service Details check the status and start the service. I use the PuTTY tool to log in to the ESXi server. The esxcfg-vmknic -l command lists all VMkernel adapters. I have highlighted both iSCSI VMkernel adapters; I specifically need their interface names.

vmkping -I vmk1 172.20.1.101

vmkping -I vmk2 172.20.1.102

-I specifies the interface; vmkping will use only the selected interface to ping the remote IP. Connectivity looks good from the ESXi server. Next, run the same ping test from the Microsoft storage target server toward the ESXi server, using the PowerShell cmdlet Test-Connection as an alternative to ping.

Test-Connection -Source 172.20.1.101 -Destination 172.20.1.10

Test-Connection -Source 172.20.1.102 -Destination 172.20.1.11

-Source is the interface on the storage server and -Destination is the ESXi server. The ping is successful (if it fails, you will see an error in red text on the screen). I can also check connectivity with telnet on port 3260. If you face any issue, check the VLAN and MTU settings on the network (remove them if required and test again). These steps are useful for basic first-line troubleshooting; most of the time I have resolved my issues with them.
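The telnet check on port 3260 can also be scripted. The following is a minimal sketch, not part of the original walkthrough: the helper name `tcp_port_open` and the 3-second timeout are my own assumptions, and it only confirms that a TCP connection can be opened, not that iSCSI login would succeed.

```python
import socket


def tcp_port_open(host: str, port: int = 3260, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Hypothetical helper for illustration; 3260 is the iSCSI port used in
    this article, the timeout value is an arbitrary assumption.
    """
    try:
        # create_connection resolves the host and performs the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable networks
        return False
```

Run from any machine on the iSCSI network, `tcp_port_open("172.20.1.101")` and `tcp_port_open("172.20.1.102")` should both return True if the target portals in this lab are listening.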

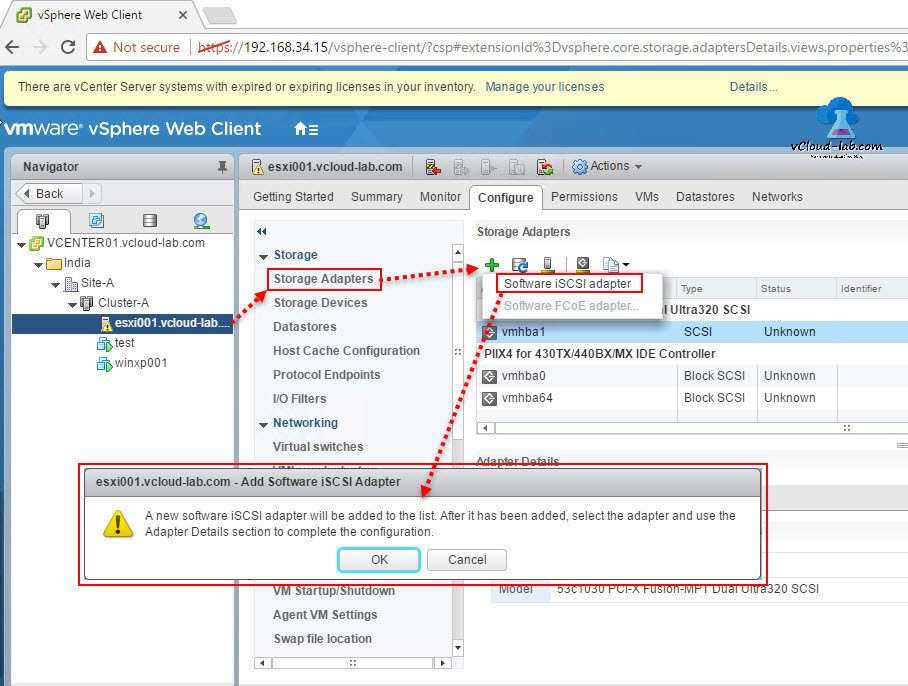

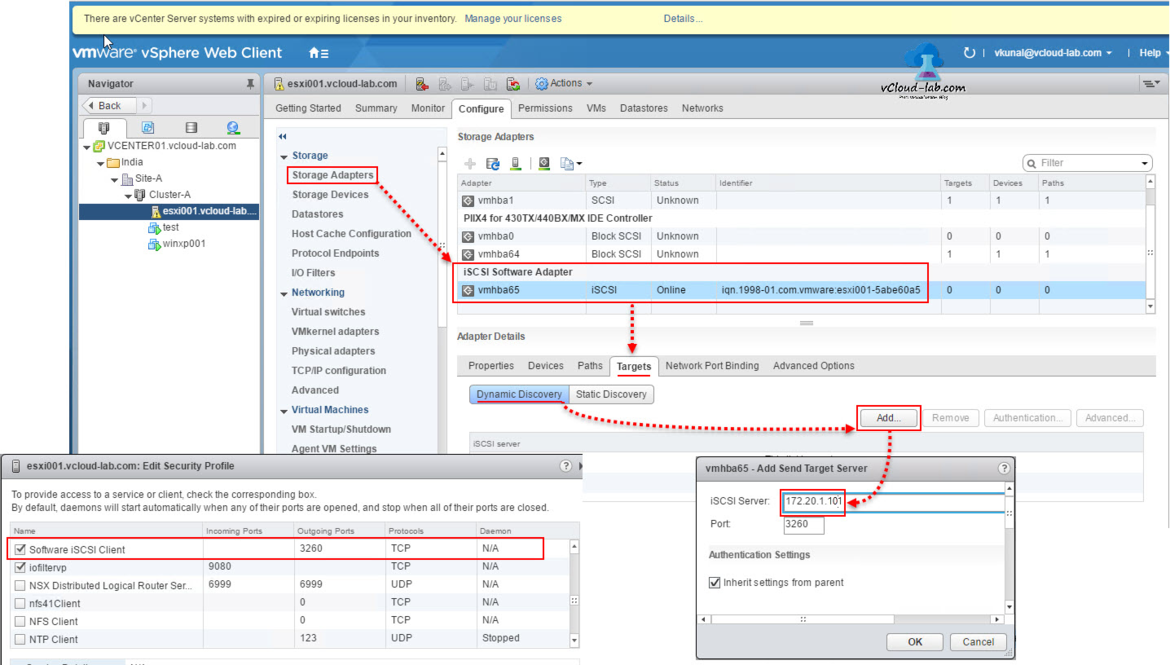

By default the software iSCSI adapter is disabled and not installed on the ESXi server. It can be added by selecting the ESXi server >> Configure tab >> Storage >> Storage Adapters >> click the green + sign >> Software iSCSI adapter.

It shows the popup message: "A new software iSCSI adapter will be added to the list. After it has been added, select the adapter and use the adapter details section to complete the configuration." Note that only one software iSCSI HBA can be added on an ESXi server. Its number is typically above 35; in my scenario it is vmhba65.

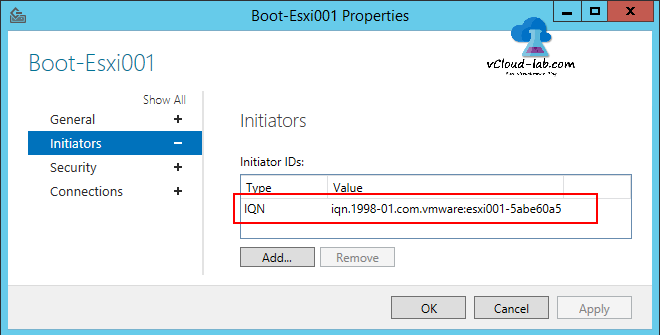

Select the vmhba65 adapter and note that the type is iSCSI and the status is online. I will need to add its IQN identifier on the storage server to grant access. IQN stands for iSCSI Qualified Name. It is somewhat similar to a physical address such as a MAC address, and it is unique. Communication takes place on port 3260; by default, when the software iSCSI adapter is enabled or added, port 3260 is opened and allowed in the ESXi security profile automatically. Next, I added this ESXi host's IQN on the Microsoft storage server. The table below shows how an IQN is constructed.

| Field | Description |
| --- | --- |
| Format | iqn.yyyy-mm.naming-authority:unique name, for example iqn.1998-01.com.vmware:esxi001-5abe60a5 |
| IQN (iSCSI qualified name) | Can be up to 255 characters long. |
| yyyy-mm | The year and month when the naming authority was established. |
| naming-authority | Usually the reverse syntax of the Internet domain name of the naming authority. For example, the iscsi.vmware.com naming authority could have the iSCSI qualified name form of iqn.1998-01.com.vmware.iscsi. The name indicates that the vmware.com domain name was registered in January of 1998, and iscsi is a subdomain maintained by vmware.com. |
| unique name | Any name you want to use, for example, the name of your host. The naming authority must make sure that any names assigned following the colon are unique. |
| EUI (extended unique identifier) | Includes the eui. prefix, followed by the 16-character name. The name includes 24 bits for the company name assigned by the IEEE and 40 bits for a unique ID, such as a serial number. |
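To make the IQN layout concrete, here is a small sketch that splits an IQN into the parts described in the table. The names `Iqn`, `parse_iqn` and `authority_domain` are hypothetical helpers of my own, and the regular expression is a simplification that only checks the overall shape, not every character rule of the iSCSI naming standard.

```python
import re
from typing import NamedTuple


class Iqn(NamedTuple):
    year_month: str        # yyyy-mm when the naming authority was established
    naming_authority: str  # reversed domain name, e.g. com.vmware
    unique_name: str       # text after the colon, empty if absent


# Simplified pattern: iqn.<yyyy-mm>.<reversed domain>[:<unique name>]
_IQN_RE = re.compile(r"^iqn\.(\d{4}-\d{2})\.([^:]+)(?::(.+))?$")


def parse_iqn(name: str) -> Iqn:
    """Split an IQN string into its three parts (illustrative only)."""
    if len(name) > 255:
        raise ValueError("IQN is longer than 255 characters")
    match = _IQN_RE.match(name)
    if match is None:
        raise ValueError(f"not an IQN: {name!r}")
    return Iqn(match.group(1), match.group(2), match.group(3) or "")


def authority_domain(iqn: Iqn) -> str:
    """Reverse the naming authority back into a normal domain name."""
    return ".".join(reversed(iqn.naming_authority.split(".")))
```

For the example from the table, `parse_iqn("iqn.1998-01.com.vmware:esxi001-5abe60a5")` yields the date `1998-01`, the authority `com.vmware` and the unique name `esxi001-5abe60a5`, and `authority_domain` turns the authority back into `vmware.com`.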

I am adding one target (storage server) IP address under Dynamic Discovery. Only one of the IPs needs to be added; the target's IQN, its other IPs, and the available paths are then detected automatically. CHAP authentication is supported here, but for simplicity I am not touching it. Advanced options can be added or changed according to vendor recommendations and best-practice documents.

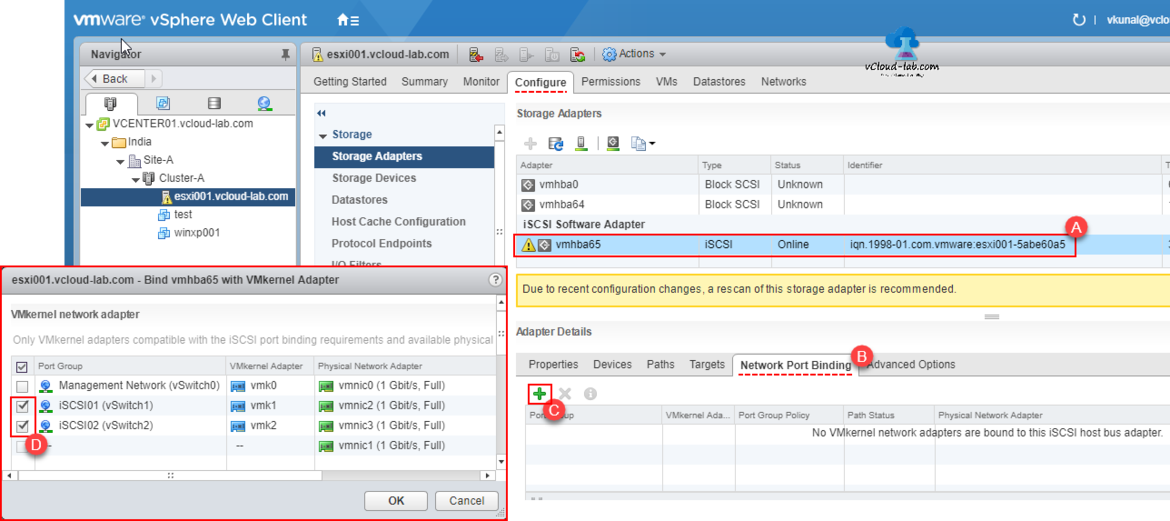

Next, configure storage network port binding. Port binding is used in iSCSI when multiple VMkernel ports for iSCSI reside in the same broadcast domain and IP subnet, to allow multiple paths to an iSCSI array that broadcasts a single IP address. When using port binding, remember that:

- Array Target iSCSI ports must reside in the same broadcast domain and IP subnet as the VMkernel port.

- All VMkernel ports used for iSCSI connectivity must reside in the same broadcast domain and IP subnet.

- All VMkernel ports used for iSCSI connectivity must reside in the same vSwitch.

- Currently, port binding does not support network routing.
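The same-subnet requirement in the list above can be sanity-checked before binding. This is a sketch under assumptions: the function name `same_subnet` is mine, and the /24 prefix length is assumed because the article does not state the subnet mask used in this lab.

```python
import ipaddress
from typing import Iterable


def same_subnet(addresses: Iterable[str], prefix_len: int = 24) -> bool:
    """Return True if every address falls in one IP network.

    prefix_len=24 is an assumption; use the mask configured on your
    VMkernel ports and target portals.
    """
    networks = {
        # ip_interface keeps the host bits and exposes the enclosing network
        ipaddress.ip_interface(f"{addr}/{prefix_len}").network
        for addr in addresses
    }
    return len(networks) == 1
```

With the addresses used in this article, `same_subnet(["172.20.1.10", "172.20.1.11", "172.20.1.101", "172.20.1.102"])` returns True, so the VMkernel ports and target portals would qualify for port binding under the assumed mask.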

VMware has published a very good article on storage network port binding; it is a must-read. Select the Network Port Binding tab at the bottom of the iSCSI vmhba, click the green + button, and select the iSCSI network VMkernel adapters.

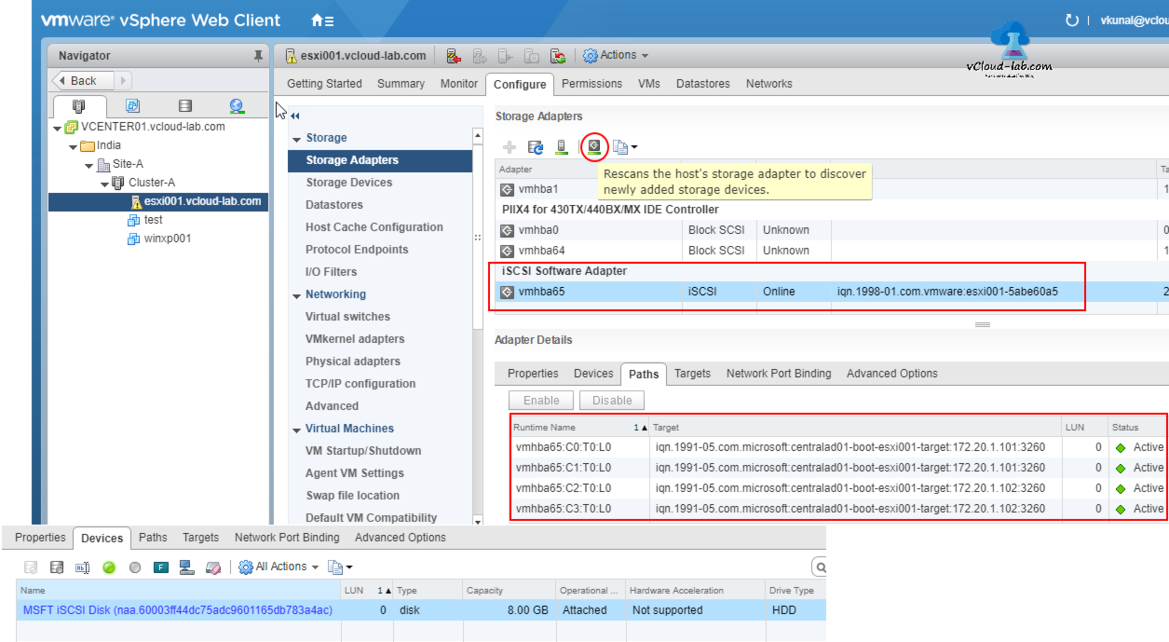

You will see the warning: "Due to recent configuration changes, a rescan of this storage adapter is recommended." So for the next step, click the rescan button (in the red circle). It rescans the host's storage adapter to discover newly added storage devices and detects all presented remote LUNs. Check the Paths tab: with two dedicated iSCSI NICs on the ESXi server and two NICs on the storage server, a total of 4 paths should be available.
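The count of 4 paths follows from each bound VMkernel port logging in to each target portal. A minimal sketch of that arithmetic, using the interface names and target IPs from this article (the function name `expected_paths` is my own):

```python
from itertools import product
from typing import List, Sequence, Tuple


def expected_paths(
    vmk_ports: Sequence[str], target_portals: Sequence[str]
) -> List[Tuple[str, str]]:
    """Pair every bound VMkernel port with every target portal IP."""
    return list(product(vmk_ports, target_portals))


paths = expected_paths(["vmk1", "vmk2"], ["172.20.1.101", "172.20.1.102"])
for vmk, portal in paths:
    print(f"{vmk} -> {portal}:3260")
print(f"total paths per LUN: {len(paths)}")
```

If the Paths tab shows fewer entries than this per LUN, one of the bindings or portals is probably not reachable.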

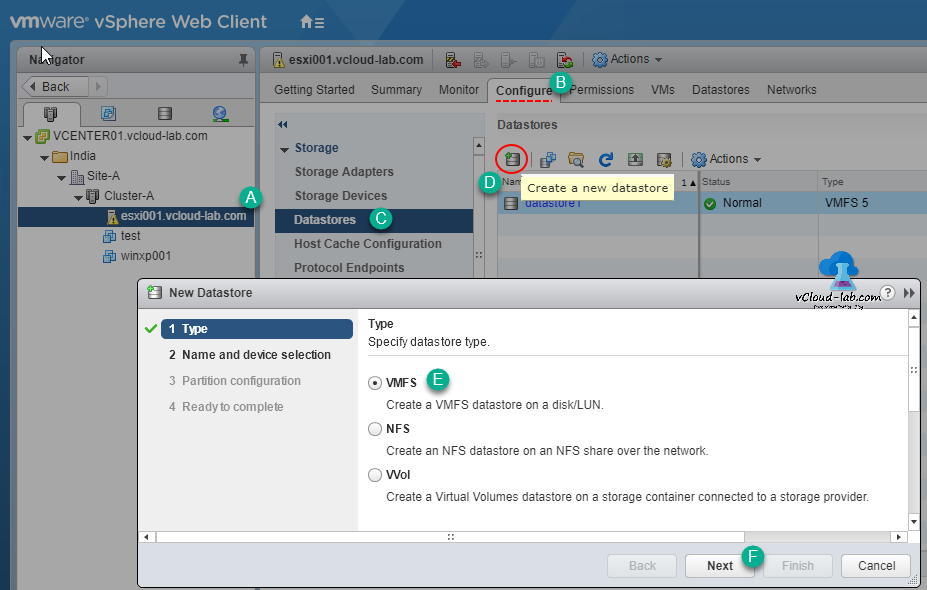

So far it all looks good to me. The next step is to add the assigned LUN and format it as a VMFS datastore. To add the remote storage disk/LUN on ESXi, go to the Configure tab, expand Storage in the left pane and click Datastores, then click the icon (circled in red) to create a new datastore. A new wizard opens; select VMFS and click Next.

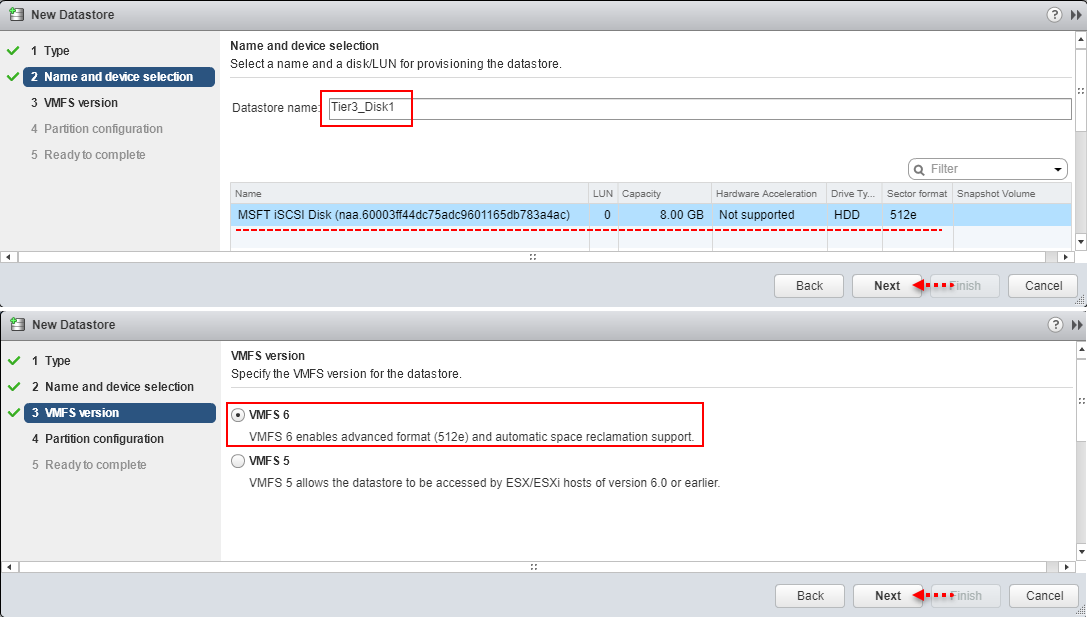

On the Name and device selection screen, give the datastore a name; as a best practice, this name should always match the LUN disk name on the storage. You can use the NAA ID in the Name column to identify the correct LUN disk, and by doing so avoid accidents such as formatting the wrong LUN. In production, especially with EMC devices, I always ask the storage team for the datastore name and the associated NAA ID to identify disks and avoid misconfiguration or mistakes. Alongside the name I can view information such as LUN number, capacity, hardware acceleration, drive type (HDD or SSD), and the new sector format.

NAA stands for Network Addressing Authority identifier. EUI stands for Extended Unique Identifier. The number is guaranteed to be unique to that LUN. The NAA or EUI identifier is the preferred method of identifying LUNs and the number is generated by the storage device.

Next is the VMFS version. As all my future hosts are going to be version 6.5 or later, I am choosing VMFS 6, which gives me the features below.

- Support for 4K Native Drives in 512e mode

- SE Sparse Default

- Automatic Space Reclamation (UNMAP)

- Support for 512 devices and 2000 paths (versus 256 and 1024 in the previous versions)

- CBRC aka View Storage Accelerator

Decide carefully before going for VMFS 6, as it is not compatible with earlier versions of ESXi, and such hosts will not be able to access the datastore. Unfortunately, it is not possible to perform an in-place upgrade from VMFS 5 to VMFS 6. To upgrade, you have to perform the following steps:

- Unmount the datastore from all ESXi hosts.

- Delete the datastore formatted with VMFS 5.

- Create a new datastore with the VMFS 6 file system using the same LUN.

Depending on your environment and how much space you have available on your array, this can be a long and painful migration.

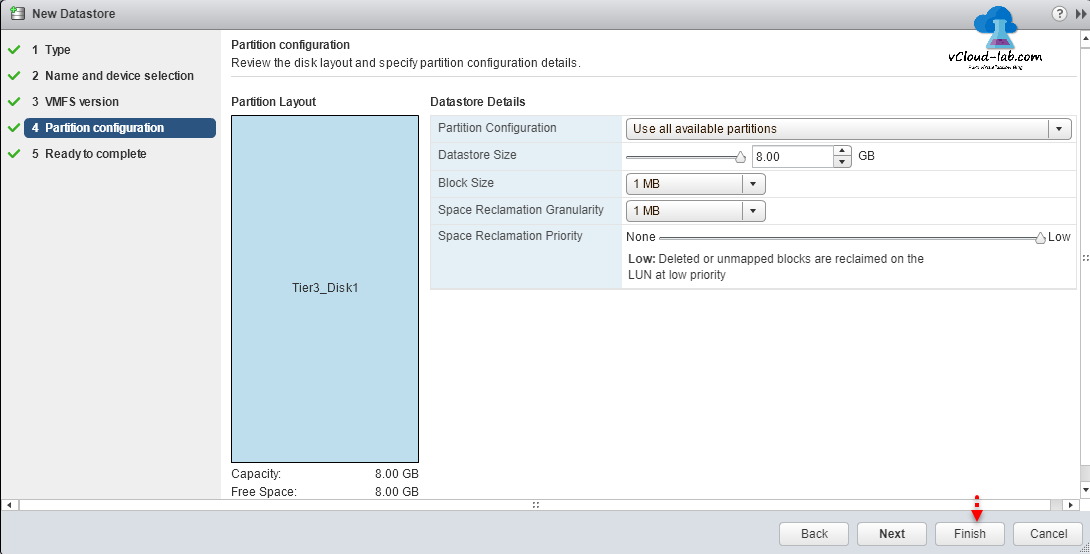

The next screen is Partition configuration. I am using the whole available partition and the full datastore size. The block size is 1 MB by default. Next, specify the granularity for the unmap operation: unmap granularity equals the block size, which is 1 MB, so storage sectors smaller than 1 MB are not reclaimed. There are two options for the space reclamation policy: Low (the default) processes unmap operations at a low rate, and None disables space reclamation for the datastore. Reclamation can also be run using commands. If everything looks good, review the changes under Ready to complete and click Finish.

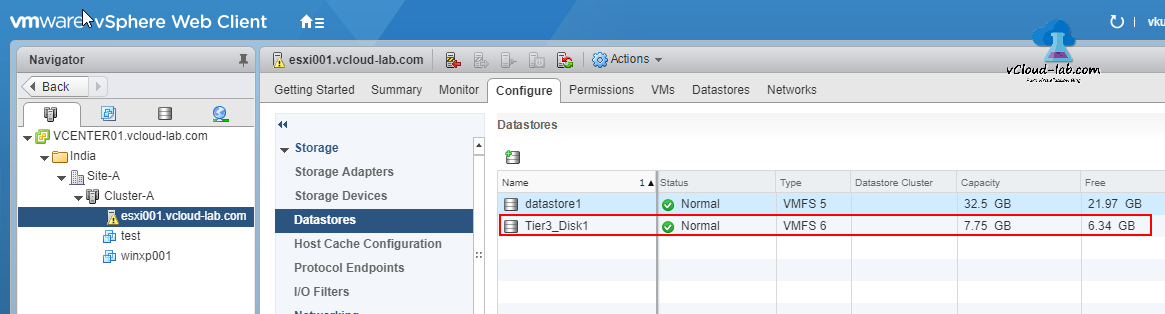

My datastore is now ready for deploying VMs. It is always a best practice to test what you have configured, so I will demonstrate this by removing the cable from one of the physical NICs to simulate a switch or network card failure, and watch how storage multipathing behaves and what happens to a VM on the datastore.

If you add another ESXi host, adding the datastore again is not required. The formatted datastore becomes visible automatically once the VMkernel ports and the software iSCSI adapter are configured. The add-datastore step needs to run only once for a newly presented storage disk. Also, whenever a LUN is presented from storage to ESXi, always rescan the adapter as well as VMFS so that it is detected correctly.