If you’re using containers, there’s a good chance you’ve heard of Kubernetes, or K8s for short. Put simply, Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications. It is meant to make your life a lot simpler, and it becomes more helpful as your infrastructure grows larger and your applications become more complex to run.

This is a detailed, step-by-step guide to installing a Kubernetes cluster on Ubuntu Linux. A Kubernetes cluster has master (control-plane) nodes and worker nodes. Here I am installing my first master node, the control plane, which runs the API server, controller manager, scheduler, and the etcd database.

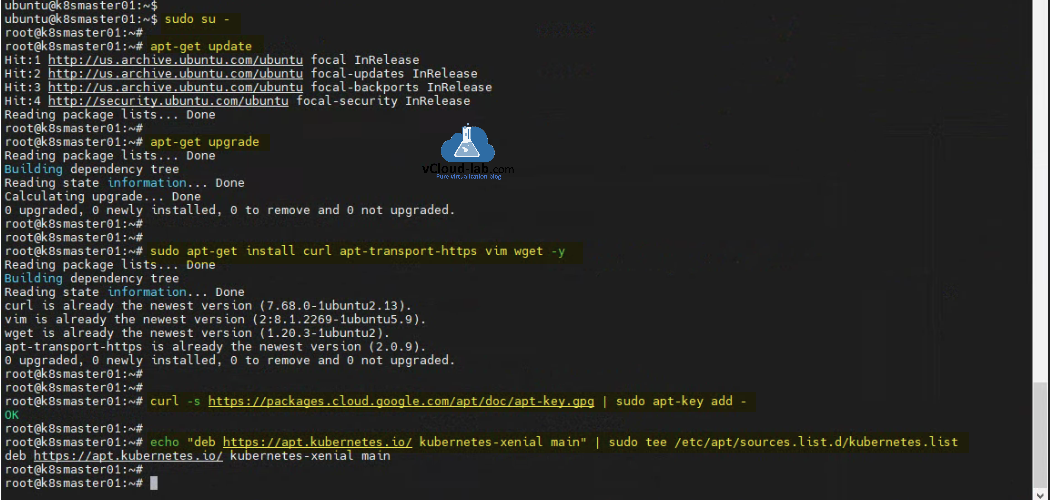

My Ubuntu servers are already installed, and I have assigned a static IP address and the hostname k8smaster01 to the first node. I run `sudo su -` to get a root shell so I don't have to type sudo in front of every command. Update the package index and upgrade the OS with `apt-get update` and `apt-get upgrade`. Then install the packages needed for the first phase with `sudo apt-get install curl apt-transport-https vim wget -y`. The apt-transport-https package lets apt fetch from repositories over HTTPS (on current Ubuntu releases apt supports HTTPS natively, so this is only a transitional package), curl and wget download files from the internet, and vim is a text editor.

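The command `curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -` adds the Kubernetes signing key to the Ubuntu node. Then add the Kubernetes repository to apt's package sources with `echo "deb https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list`. The legacy apt.kubernetes.io repository used here has since been deprecated and frozen in favour of the community-owned pkgs.k8s.io repositories, so on a new install follow the current repository instructions in the Kubernetes documentation.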

ubuntu@k8smaster01:~$ sudo su -

root@k8smaster01:~# apt-get update

Hit:1 http://us.archive.ubuntu.com/ubuntu focal InRelease

Hit:2 http://us.archive.ubuntu.com/ubuntu focal-updates InRelease

Hit:3 http://us.archive.ubuntu.com/ubuntu focal-backports InRelease

Hit:4 http://security.ubuntu.com/ubuntu focal-security InRelease

Reading package lists... Done

root@k8smaster01:~# apt-get upgrade

Reading package lists... Done

Building dependency tree

Reading state information... Done

Calculating upgrade... Done

0 upgraded, 0 newly installed, 0 to remove and 0 not upgraded.

root@k8smaster01:~# sudo apt-get install curl apt-transport-https vim wget -y

Reading package lists... Done

Building dependency tree

Reading state information... Done

curl is already the newest version (7.68.0-1ubuntu2.13).

vim is already the newest version (2:8.1.2269-1ubuntu5.9).

wget is already the newest version (1.20.3-1ubuntu2).

apt-transport-https is already the newest version (2.0.9).

0 upgraded, 0 newly installed, 0 to remove and 0 not upgraded.

root@k8smaster01:~# curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

OK

root@k8smaster01:~# echo "deb https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

deb https://apt.kubernetes.io/ kubernetes-xenial main

root@k8smaster01:~#

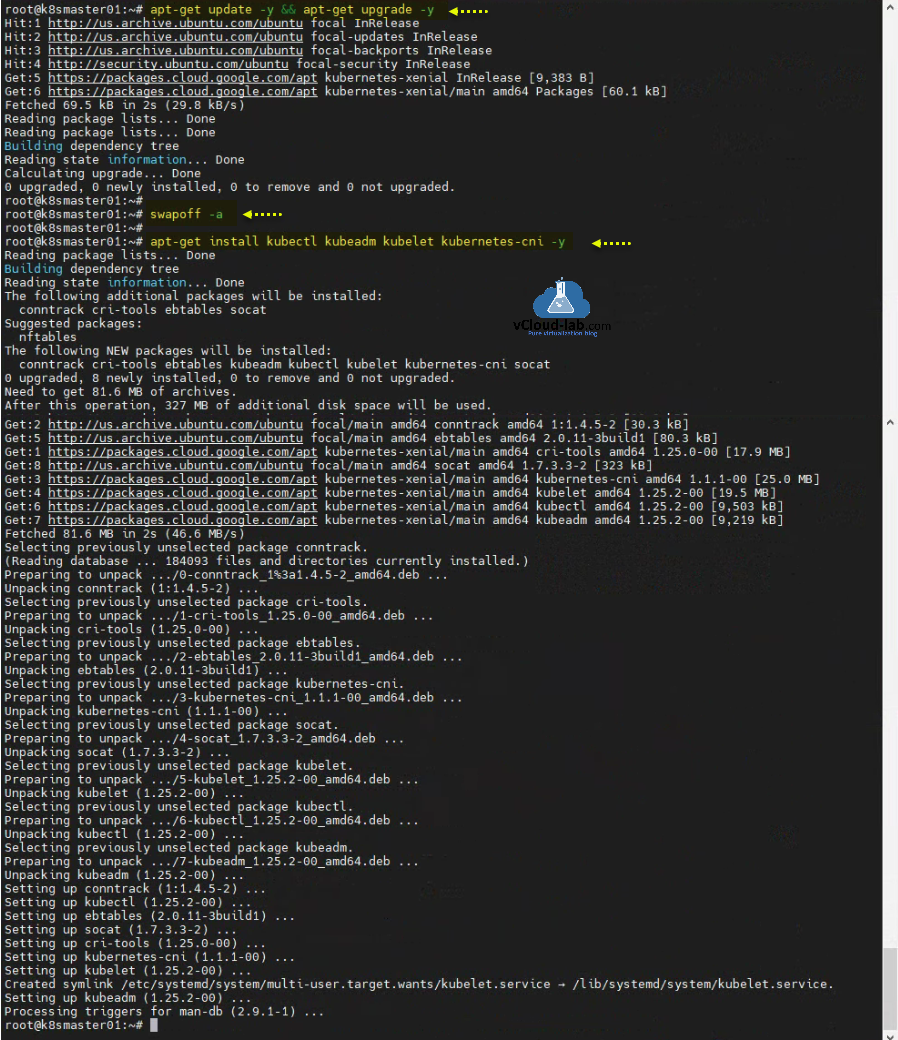

With the Kubernetes repository added to apt's sources, refresh the package index with `apt-get update -y`. Next, turn off swap with `swapoff -a`. By default the kubelet refuses to start while swap is enabled.
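`swapoff -a` acts only on the running system. The following minimal sketch offers a quick way to confirm that it worked. It counts active swap areas by reading `/proc/swaps`, which holds a header line followed by one line per active swap area. The helper name `count_active_swaps` is illustrative and is not part of any Kubernetes tooling. `swapon --show` reports the same information interactively.

```shell
# Hypothetical helper: count active swap areas.
# /proc/swaps starts with a header line; every further line is one active swap area.
# An optional argument lets you point it at another file (useful for testing).
count_active_swaps() {
    awk 'NR > 1 { n++ } END { print n + 0 }' "${1:-/proc/swaps}"
}

# On the node, after swapoff -a, this should print 0:
#   count_active_swaps
```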

Install the Kubernetes components with `apt-get install kubectl kubeadm kubelet kubernetes-cni -y`:

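kubectl: command-line tool for connecting to a Kubernetes cluster and managing it.

kubeadm: tool you use to create and initialize (bootstrap) a Kubernetes cluster.

kubelet: the agent that runs on every node. It registers the node with the cluster and, through the container runtime, makes sure the containers described in Pod specs are running.

kubernetes-cni: the standard Container Network Interface (CNI) plugin binaries that pod networking builds on.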

root@k8smaster01:~# apt-get update -y && apt-get upgrade -y

Hit:1 http://us.archive.ubuntu.com/ubuntu focal InRelease

Hit:2 http://us.archive.ubuntu.com/ubuntu focal-updates InRelease

Hit:3 http://us.archive.ubuntu.com/ubuntu focal-backports InRelease

Hit:4 http://security.ubuntu.com/ubuntu focal-security InRelease

Get:5 https://packages.cloud.google.com/apt kubernetes-xenial InRelease [9,383 B]

Get:6 https://packages.cloud.google.com/apt kubernetes-xenial/main amd64 Packages [60.1 kB]

Fetched 69.5 kB in 2s (29.8 kB/s)

Reading package lists... Done

Reading package lists... Done

Building dependency tree

Reading state information... Done

Calculating upgrade... Done

0 upgraded, 0 newly installed, 0 to remove and 0 not upgraded.

root@k8smaster01:~# swapoff -a

root@k8smaster01:~# apt-get install kubectl kubeadm kubelet kubernetes-cni -y

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following additional packages will be installed:

conntrack cri-tools ebtables socat

Suggested packages:

nftables

The following NEW packages will be installed:

conntrack cri-tools ebtables kubeadm kubectl kubelet kubernetes-cni socat

0 upgraded, 8 newly installed, 0 to remove and 0 not upgraded.

Need to get 81.6 MB of archives.

After this operation, 327 MB of additional disk space will be used.

Get:2 http://us.archive.ubuntu.com/ubuntu focal/main amd64 conntrack amd64 1:1.4.5-2 [30.3 kB]

Get:5 http://us.archive.ubuntu.com/ubuntu focal/main amd64 ebtables amd64 2.0.11-3build1 [80.3 kB]

Get:1 https://packages.cloud.google.com/apt kubernetes-xenial/main amd64 cri-tools amd64 1.25.0-00 [17.9 MB]

Get:8 http://us.archive.ubuntu.com/ubuntu focal/main amd64 socat amd64 1.7.3.3-2 [323 kB]

Get:3 https://packages.cloud.google.com/apt kubernetes-xenial/main amd64 kubernetes-cni amd64 1.1.1-00 [25.0 MB]

Get:4 https://packages.cloud.google.com/apt kubernetes-xenial/main amd64 kubelet amd64 1.25.2-00 [19.5 MB]

Get:6 https://packages.cloud.google.com/apt kubernetes-xenial/main amd64 kubectl amd64 1.25.2-00 [9,503 kB]

Get:7 https://packages.cloud.google.com/apt kubernetes-xenial/main amd64 kubeadm amd64 1.25.2-00 [9,219 kB]

Fetched 81.6 MB in 2s (46.6 MB/s)

Selecting previously unselected package conntrack.

(Reading database ... 184093 files and directories currently installed.)

Preparing to unpack .../0-conntrack_1%3a1.4.5-2_amd64.deb ...

Unpacking conntrack (1:1.4.5-2) ...

Selecting previously unselected package cri-tools.

Preparing to unpack .../1-cri-tools_1.25.0-00_amd64.deb ...

Unpacking cri-tools (1.25.0-00) ...

Selecting previously unselected package ebtables.

Preparing to unpack .../2-ebtables_2.0.11-3build1_amd64.deb ...

Unpacking ebtables (2.0.11-3build1) ...

Selecting previously unselected package kubernetes-cni.

Preparing to unpack .../3-kubernetes-cni_1.1.1-00_amd64.deb ...

Unpacking kubernetes-cni (1.1.1-00) ...

Selecting previously unselected package socat.

Preparing to unpack .../4-socat_1.7.3.3-2_amd64.deb ...

Unpacking socat (1.7.3.3-2) ...

Selecting previously unselected package kubelet.

Preparing to unpack .../5-kubelet_1.25.2-00_amd64.deb ...

Unpacking kubelet (1.25.2-00) ...

Selecting previously unselected package kubectl.

Preparing to unpack .../6-kubectl_1.25.2-00_amd64.deb ...

Unpacking kubectl (1.25.2-00) ...

Selecting previously unselected package kubeadm.

Preparing to unpack .../7-kubeadm_1.25.2-00_amd64.deb ...

Unpacking kubeadm (1.25.2-00) ...

Setting up conntrack (1:1.4.5-2) ...

Setting up kubectl (1.25.2-00) ...

Setting up ebtables (2.0.11-3build1) ...

Setting up socat (1.7.3.3-2) ...

Setting up cri-tools (1.25.0-00) ...

Setting up kubernetes-cni (1.1.1-00) ...

Setting up kubelet (1.25.2-00) ...

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /lib/systemd/system/kubelet.service.

Setting up kubeadm (1.25.2-00) ...

Processing triggers for man-db (2.9.1-1) ...

root@k8smaster01:~#

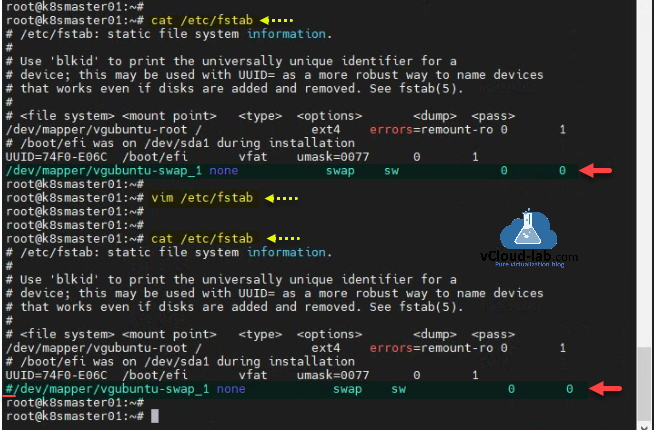

`swapoff -a` only lasts until the next reboot. To keep swap disabled permanently, open `/etc/fstab` with `vim /etc/fstab` and comment out the line for the swap device.

root@k8smaster01:~# vim /etc/fstab

root@k8smaster01:~# cat /etc/fstab

# /etc/fstab: static file system information.

#

# Use 'blkid' to print the universally unique identifier for a

# device; this may be used with UUID= as a more robust way to name devices

# that works even if disks are added and removed. See fstab(5).

#

# <file system> <mount point> <type> <options> <dump> <pass>

/dev/mapper/vgubuntu-root / ext4 errors=remount-ro 0 1

# /boot/efi was on /dev/sda1 during installation

UUID=74F0-E06C /boot/efi vfat umask=0077 0 1

#/dev/mapper/vgubuntu-swap_1 none swap sw 0 0

root@k8smaster01:~#

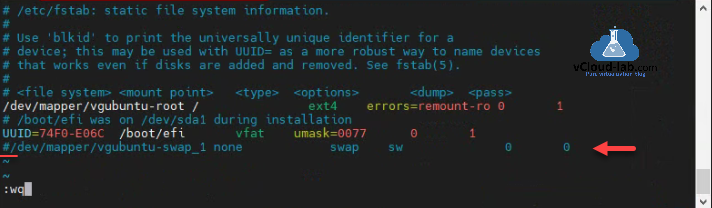

This is how the file looks in vim after the swap entry has been commented out.

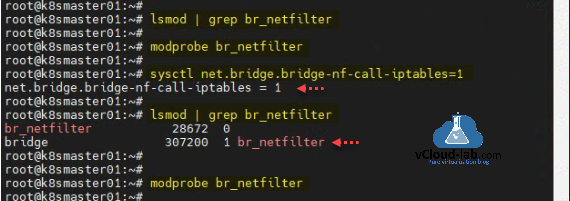
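Editing the file by hand works well for a single node. When you prepare several nodes, you can script the same edit. Below is a minimal sketch using a hypothetical helper, `disable_swap_in_fstab`. It assumes a standard fstab layout in which the third field is the filesystem type. The helper keeps a `.bak` copy and leaves lines that are already commented out untouched, so running it twice is harmless.

```shell
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# An entry counts as swap when its third field (the filesystem type) is "swap".
# Lines that already start with "#" are not matched, so the edit is idempotent.
disable_swap_in_fstab() {
    fstab="${1:-/etc/fstab}"
    cp "$fstab" "$fstab.bak"   # keep a backup before editing in place
    sed -i -E 's/^[^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]]|$)/#&/' "$fstab"
}

# On the node (as root):
#   disable_swap_in_fstab /etc/fstab
```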

Next, make sure iptables on the master and worker nodes can see bridged network traffic. Check whether the required br_netfilter kernel module is loaded with `lsmod | grep br_netfilter`, load it with `modprobe br_netfilter`, and then enable the setting with `sysctl net.bridge.bridge-nf-call-iptables=1`.

root@k8smaster01:~# lsmod | grep br_netfilter

root@k8smaster01:~# modprobe br_netfilter

root@k8smaster01:~# sysctl net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-iptables = 1

root@k8smaster01:~# lsmod | grep br_netfilter

br_netfilter 28672 0

bridge 307200 1 br_netfilter

root@k8smaster01:~# modprobe br_netfilter

root@k8smaster01:~#

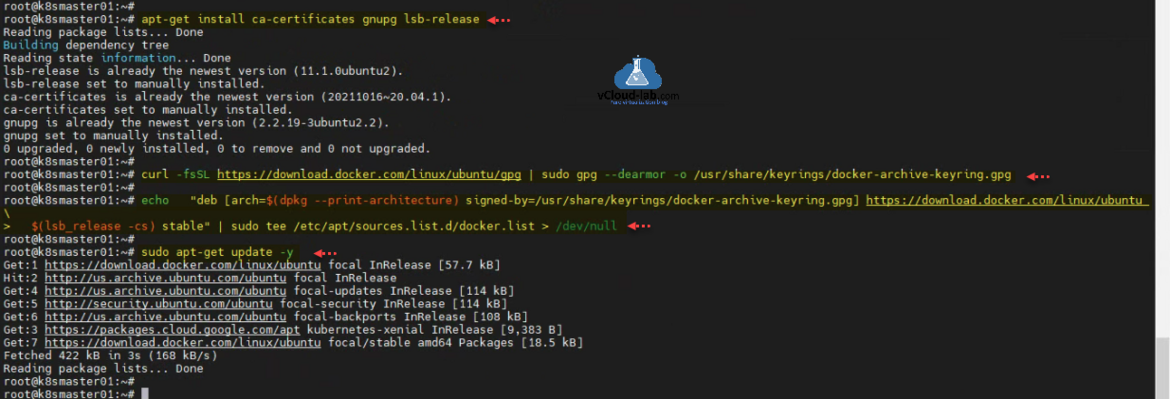
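Changes made with `modprobe` and `sysctl` on the command line are lost on reboot. The minimal sketch below persists them under `/etc/modules-load.d` and `/etc/sysctl.d`, following the layout in the upstream Kubernetes container-runtime prerequisites. The `overlay` module and the `ip6tables` and `ip_forward` keys come from those docs, not from this walkthrough. `ROOT` is a hypothetical dry-run prefix.

```shell
# Sketch: persist the kernel settings used above so they survive a reboot.
# ROOT is a hypothetical dry-run prefix: leave it unset on a real node (run as root),
# or point it at a scratch directory to inspect the generated files first.
persist_k8s_net_settings() {
    root="${ROOT:-}"
    mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"

    # Kernel modules loaded at boot by systemd-modules-load.
    printf '%s\n' overlay br_netfilter > "$root/etc/modules-load.d/k8s.conf"

    # Let iptables see bridged traffic and allow IPv4 forwarding.
    cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-iptables  = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward                 = 1
EOF

    # Apply the settings right away only when writing to the real /etc.
    if [ -z "$root" ]; then
        modprobe overlay
        modprobe br_netfilter
        sysctl --system
    fi
}
```

On a real node, run `persist_k8s_net_settings` as root with `ROOT` unset. For a dry run, use `ROOT=$(mktemp -d)` first and inspect the two files.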

Now prepare the node for the Docker runtime. Install the supporting packages with `apt-get install ca-certificates gnupg lsb-release`. The ca-certificates package provides the certificate authority (CA) certificates used to verify the identity of remote servers and encrypt the connection to them. The gnupg package (GNU Privacy Guard) encrypts and signs data and communications and includes a versatile key management system; here it handles Docker's repository signing key. The lsb-release package provides the `lsb_release` command, which prints Linux Standard Base (LSB) and distribution information such as the release codename.

Add Docker's signing key to the node with `curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg`. Then add the Docker package repository to apt's sources with `echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null`. Refresh the package index with `apt-get update -y`. The output shows the new Docker repository being fetched.

root@k8smaster01:~# apt-get install ca-certificates gnupg lsb-release

Reading package lists... Done

Building dependency tree

Reading state information... Done

lsb-release is already the newest version (11.1.0ubuntu2).

lsb-release set to manually installed.

ca-certificates is already the newest version (20211016~20.04.1).

ca-certificates set to manually installed.

gnupg is already the newest version (2.2.19-3ubuntu2.2).

gnupg set to manually installed.

0 upgraded, 0 newly installed, 0 to remove and 0 not upgraded.

root@k8smaster01:~# curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

root@k8smaster01:~# echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu \

> $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

root@k8smaster01:~# sudo apt-get update -y

Get:1 https://download.docker.com/linux/ubuntu focal InRelease [57.7 kB]

Hit:2 http://us.archive.ubuntu.com/ubuntu focal InRelease

Get:4 http://us.archive.ubuntu.com/ubuntu focal-updates InRelease [114 kB]

Get:5 http://security.ubuntu.com/ubuntu focal-security InRelease [114 kB]

Get:6 http://us.archive.ubuntu.com/ubuntu focal-backports InRelease [108 kB]

Get:3 https://packages.cloud.google.com/apt kubernetes-xenial InRelease [9,383 B]

Get:7 https://download.docker.com/linux/ubuntu focal/stable amd64 Packages [18.5 kB]

Fetched 422 kB in 3s (168 kB/s)

Reading package lists... Done

root@k8smaster01:~#

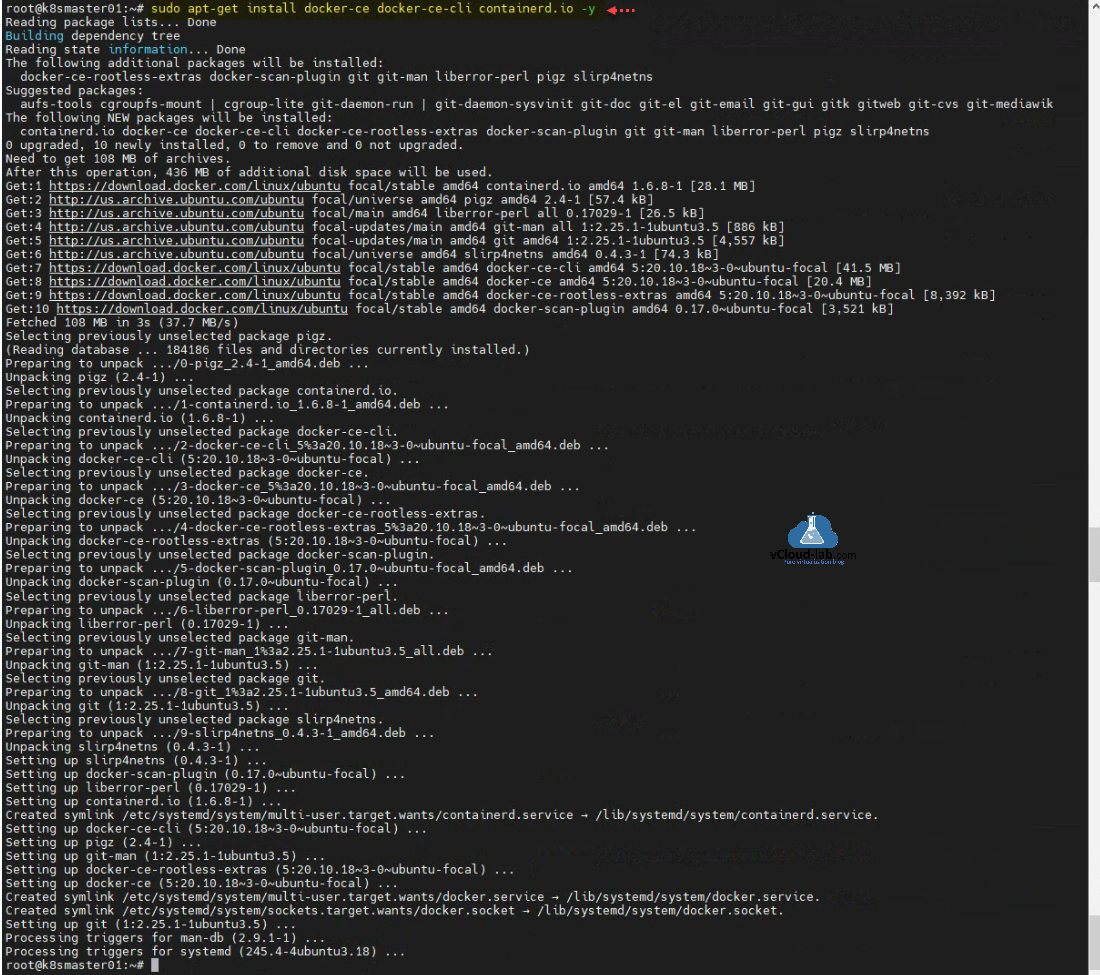
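In the `echo` command above, the backslash only continues the quoted string onto a second line, so `docker.list` ends up holding a single line. The minimal sketch below uses a hypothetical helper, `docker_repo_line`, to print that line. By default it fills in the architecture and codename from `dpkg --print-architecture` and `lsb_release -cs`, just as the original command does.

```shell
# Hypothetical helper: print the single apt source line written to
# /etc/apt/sources.list.d/docker.list. Arguments override the detected
# architecture and Ubuntu codename (handy for checking the output elsewhere).
docker_repo_line() {
    arch="${1:-$(dpkg --print-architecture)}"
    codename="${2:-$(lsb_release -cs)}"
    printf 'deb [arch=%s signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu %s stable\n' "$arch" "$codename"
}
```

On the node, `docker_repo_line | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null` writes the same file as the original command.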

Install the Docker and containerd runtime with `sudo apt-get install docker-ce docker-ce-cli containerd.io -y`. Docker is an open platform for developing, shipping, and running applications. It lets you separate your applications from your infrastructure so you can deliver software quickly, and it lets you manage your infrastructure in the same ways you manage your applications.

root@k8smaster01:~# sudo apt-get install docker-ce docker-ce-cli containerd.io -y

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following additional packages will be installed:

docker-ce-rootless-extras docker-scan-plugin git git-man liberror-perl pigz slirp4netns

Suggested packages:

aufs-tools cgroupfs-mount | cgroup-lite git-daemon-run | git-daemon-sysvinit git-doc git-el git-email git-gui gitk gitweb git-cvs git-mediawiki git-svn

The following NEW packages will be installed:

containerd.io docker-ce docker-ce-cli docker-ce-rootless-extras docker-scan-plugin git git-man liberror-perl pigz slirp4netns

0 upgraded, 10 newly installed, 0 to remove and 0 not upgraded.

Need to get 108 MB of archives.

After this operation, 436 MB of additional disk space will be used.

Get:1 https://download.docker.com/linux/ubuntu focal/stable amd64 containerd.io amd64 1.6.8-1 [28.1 MB]

Get:2 http://us.archive.ubuntu.com/ubuntu focal/universe amd64 pigz amd64 2.4-1 [57.4 kB]

Get:3 http://us.archive.ubuntu.com/ubuntu focal/main amd64 liberror-perl all 0.17029-1 [26.5 kB]

Get:4 http://us.archive.ubuntu.com/ubuntu focal-updates/main amd64 git-man all 1:2.25.1-1ubuntu3.5 [886 kB]

Get:5 http://us.archive.ubuntu.com/ubuntu focal-updates/main amd64 git amd64 1:2.25.1-1ubuntu3.5 [4,557 kB]

Get:6 http://us.archive.ubuntu.com/ubuntu focal/universe amd64 slirp4netns amd64 0.4.3-1 [74.3 kB]

Get:7 https://download.docker.com/linux/ubuntu focal/stable amd64 docker-ce-cli amd64 5:20.10.18~3-0~ubuntu-focal [41.5 MB]

Get:8 https://download.docker.com/linux/ubuntu focal/stable amd64 docker-ce amd64 5:20.10.18~3-0~ubuntu-focal [20.4 MB]

Get:9 https://download.docker.com/linux/ubuntu focal/stable amd64 docker-ce-rootless-extras amd64 5:20.10.18~3-0~ubuntu-focal [8,392 kB]

Get:10 https://download.docker.com/linux/ubuntu focal/stable amd64 docker-scan-plugin amd64 0.17.0~ubuntu-focal [3,521 kB]

Fetched 108 MB in 3s (37.7 MB/s)

Selecting previously unselected package pigz.

(Reading database ... 184186 files and directories currently installed.)

Preparing to unpack .../0-pigz_2.4-1_amd64.deb ...

Unpacking pigz (2.4-1) ...

Selecting previously unselected package containerd.io.

Preparing to unpack .../1-containerd.io_1.6.8-1_amd64.deb ...

Unpacking containerd.io (1.6.8-1) ...

Selecting previously unselected package docker-ce-cli.

Preparing to unpack .../2-docker-ce-cli_5%3a20.10.18~3-0~ubuntu-focal_amd64.deb ...

Unpacking docker-ce-cli (5:20.10.18~3-0~ubuntu-focal) ...

Selecting previously unselected package docker-ce.

Preparing to unpack .../3-docker-ce_5%3a20.10.18~3-0~ubuntu-focal_amd64.deb ...

Unpacking docker-ce (5:20.10.18~3-0~ubuntu-focal) ...

Selecting previously unselected package docker-ce-rootless-extras.

Preparing to unpack .../4-docker-ce-rootless-extras_5%3a20.10.18~3-0~ubuntu-focal_amd64.deb ...

Unpacking docker-ce-rootless-extras (5:20.10.18~3-0~ubuntu-focal) ...

Selecting previously unselected package docker-scan-plugin.

Preparing to unpack .../5-docker-scan-plugin_0.17.0~ubuntu-focal_amd64.deb ...

Unpacking docker-scan-plugin (0.17.0~ubuntu-focal) ...

Selecting previously unselected package liberror-perl.

Preparing to unpack .../6-liberror-perl_0.17029-1_all.deb ...

Unpacking liberror-perl (0.17029-1) ...

Selecting previously unselected package git-man.

Preparing to unpack .../7-git-man_1%3a2.25.1-1ubuntu3.5_all.deb ...

Unpacking git-man (1:2.25.1-1ubuntu3.5) ...

Selecting previously unselected package git.

Preparing to unpack .../8-git_1%3a2.25.1-1ubuntu3.5_amd64.deb ...

Unpacking git (1:2.25.1-1ubuntu3.5) ...

Selecting previously unselected package slirp4netns.

Preparing to unpack .../9-slirp4netns_0.4.3-1_amd64.deb ...

Unpacking slirp4netns (0.4.3-1) ...

Setting up slirp4netns (0.4.3-1) ...

Setting up docker-scan-plugin (0.17.0~ubuntu-focal) ...

Setting up liberror-perl (0.17029-1) ...

Setting up containerd.io (1.6.8-1) ...

Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /lib/systemd/system/containerd.service.

Setting up docker-ce-cli (5:20.10.18~3-0~ubuntu-focal) ...

Setting up pigz (2.4-1) ...

Setting up git-man (1:2.25.1-1ubuntu3.5) ...

Setting up docker-ce-rootless-extras (5:20.10.18~3-0~ubuntu-focal) ...

Setting up docker-ce (5:20.10.18~3-0~ubuntu-focal) ...

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /lib/systemd/system/docker.service.

Created symlink /etc/systemd/system/sockets.target.wants/docker.socket → /lib/systemd/system/docker.socket.

Setting up git (1:2.25.1-1ubuntu3.5) ...

Processing triggers for man-db (2.9.1-1) ...

Processing triggers for systemd (245.4-4ubuntu3.18) ...

root@k8smaster01:~#

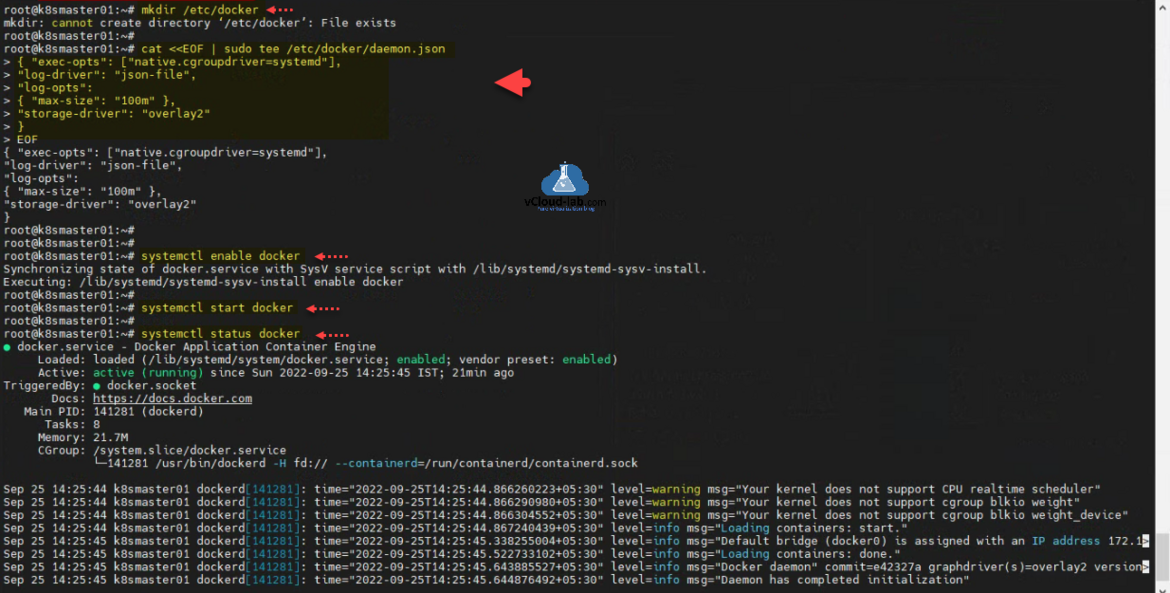

If the `/etc/docker` folder does not exist, create it with `mkdir /etc/docker`. By default Docker uses cgroupfs as its cgroup driver, while Kubernetes recommends the systemd driver on systems that use systemd. Create the `/etc/docker/daemon.json` configuration file with the command below:

cat <<EOF | sudo tee /etc/docker/daemon.json

{ "exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts":

{ "max-size": "100m" },

"storage-driver": "overlay2"

}

EOF

`systemctl enable docker` makes the Docker daemon start automatically after every reboot. Restart the Docker service with `systemctl restart docker` so that it picks up the new configuration, and check its status with `systemctl status docker`.

root@k8smaster01:~# mkdir /etc/docker

mkdir: cannot create directory ‘/etc/docker’: File exists

root@k8smaster01:~# cat <<EOF | sudo tee /etc/docker/daemon.json

> { "exec-opts": ["native.cgroupdriver=systemd"],

> "log-driver": "json-file",

> "log-opts":

> { "max-size": "100m" },

> "storage-driver": "overlay2"

> }

> EOF

{ "exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts":

{ "max-size": "100m" },

"storage-driver": "overlay2"

}

root@k8smaster01:~# systemctl enable docker

Synchronizing state of docker.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable docker

root@k8smaster01:~# systemctl start docker

root@k8smaster01:~# systemctl status docker

● docker.service - Docker Application Container Engine

Loaded: loaded (/lib/systemd/system/docker.service; enabled; vendor preset: enabled)

Active: active (running) since Sun 2022-09-25 14:25:45 IST; 21min ago

TriggeredBy: ● docker.socket

Docs: https://docs.docker.com

Main PID: 141281 (dockerd)

Tasks: 8

Memory: 21.7M

CGroup: /system.slice/docker.service

└─141281 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

Sep 25 14:25:44 k8smaster01 dockerd[141281]: time="2022-09-25T14:25:44.866260223+05:30" level=warning msg="Your kernel does not support CPU realtime scheduler"

Sep 25 14:25:44 k8smaster01 dockerd[141281]: time="2022-09-25T14:25:44.866290980+05:30" level=warning msg="Your kernel does not support cgroup blkio weight"

Sep 25 14:25:44 k8smaster01 dockerd[141281]: time="2022-09-25T14:25:44.866304552+05:30" level=warning msg="Your kernel does not support cgroup blkio weight_device"

Sep 25 14:25:44 k8smaster01 dockerd[141281]: time="2022-09-25T14:25:44.867240439+05:30" level=info msg="Loading containers: start."

Sep 25 14:25:45 k8smaster01 dockerd[141281]: time="2022-09-25T14:25:45.338255004+05:30" level=info msg="Default bridge (docker0) is assigned with an IP address 172.1>

Sep 25 14:25:45 k8smaster01 dockerd[141281]: time="2022-09-25T14:25:45.522733102+05:30" level=info msg="Loading containers: done."

Sep 25 14:25:45 k8smaster01 dockerd[141281]: time="2022-09-25T14:25:45.643885527+05:30" level=info msg="Docker daemon" commit=e42327a graphdriver(s)=overlay2 version>

Sep 25 14:25:45 k8smaster01 dockerd[141281]: time="2022-09-25T14:25:45.644876492+05:30" level=info msg="Daemon has completed initialization"

Sep 25 14:25:45 k8smaster01 systemd[1]: Started Docker Application Container Engine.

Sep 25 14:25:45 k8smaster01 dockerd[141281]: time="2022-09-25T14:25:45.687264393+05:30" level=info msg="API listen on /run/docker.sock"

root@k8smaster01:~#

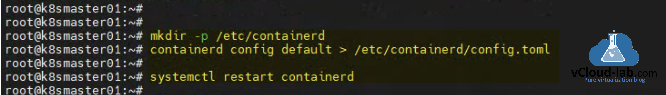

Create the `/etc/containerd` folder with `mkdir -p /etc/containerd`, write containerd's default configuration with `containerd config default > /etc/containerd/config.toml`, and restart the daemon with `systemctl restart containerd`.

root@k8smaster01:~# mkdir -p /etc/containerd

root@k8smaster01:~# containerd config default > /etc/containerd/config.toml

root@k8smaster01:~# systemctl restart containerd

root@k8smaster01:~#
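Two details about this step are worth knowing, with the caveat that they depend on the containerd version (the transcript shows containerd.io 1.6.8). First, the `config.toml` shipped by the containerd.io package disables the CRI plugin that the kubelet needs, which is one reason regenerating the default config matters. Second, the regenerated file sets `SystemdCgroup = false` for runc, whereas kubeadm has defaulted the kubelet to the `systemd` cgroup driver since v1.22. The upstream container-runtime docs recommend setting `SystemdCgroup = true` so the two match. The following is a minimal sketch using a hypothetical helper name:

```shell
# Hypothetical helper: switch runc in containerd's generated config to the systemd cgroup driver.
# Assumes the file was produced by `containerd config default`, which contains "SystemdCgroup = false".
set_containerd_systemd_cgroup() {
    config="${1:-/etc/containerd/config.toml}"
    sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' "$config"
}

# On the node (as root), apply it and restart containerd so the change takes effect:
#   set_containerd_systemd_cgroup && systemctl restart containerd
```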

The platform is now ready to deploy the Kubernetes cluster with `kubeadm init --pod-network-cidr=10.244.0.0/16`. This initializes the first Kubernetes master in the cluster. Note down the `kubeadm join` command printed at the end of the output. I will use it in the next article to join a Kubernetes worker node to this master.

root@k8smaster01:~# kubeadm init --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.25.2

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8smaster01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.34.61]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8smaster01 localhost] and IPs [192.168.34.61 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8smaster01 localhost] and IPs [192.168.34.61 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 14.004808 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8smaster01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8smaster01 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: pryngq.9uyua66107320l6a

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.34.61:6443 --token pryngq.9uyua66107320l6a \

--discovery-token-ca-cert-hash sha256:1a7ce7f1bbbdac289749e0b4c62c6bba3eae98f9678fa710a97e96e26b4d8d92

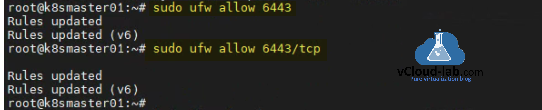
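The join command is printed only once. If you lose it, running `kubeadm token create --print-join-command` on the control-plane node prints a new one. Bootstrap tokens expire after 24 hours by default. You can also recompute the `--discovery-token-ca-cert-hash` value from the cluster CA certificate with the openssl pipeline given in the kubeadm join reference. The minimal sketch below wraps that pipeline in a hypothetical helper and assumes the default RSA CA key that kubeadm generates.

```shell
# Hypothetical helper: recompute the --discovery-token-ca-cert-hash value for `kubeadm join`.
# It is the SHA-256 of the CA's DER-encoded public key (assumes an RSA CA key).
ca_cert_hash() {
    cert="${1:-/etc/kubernetes/pki/ca.crt}"
    # Extract the public key, convert it to DER, hash it, and keep only the hex digest.
    hex=$(openssl x509 -pubkey -noout -in "$cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //')
    printf 'sha256:%s\n' "$hex"
}

# On the control-plane node:
#   ca_cert_hash
```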

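If the ufw firewall is active, open port 6443 so that worker nodes can reach the Kubernetes API server. This is the port used in the `kubeadm join` command above.

root@k8smaster01:~# sudo ufw allow 6443

Rules updated

Rules updated (v6)

root@k8smaster01:~# sudo ufw allow 6443/tcp

Rules updated

Rules updated (v6)

root@k8smaster01:~#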

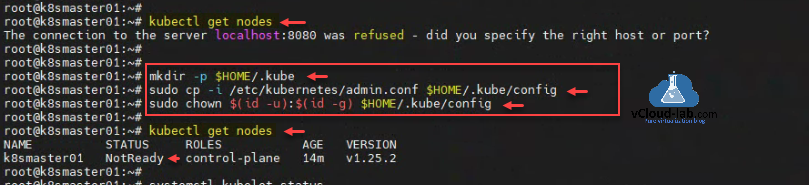

The Kubernetes cluster with its first master (control-plane) node is now installed. Running `kubectl get nodes` to list the nodes fails at first, because the kubeconfig file that kubectl uses to connect to the cluster has not been set up yet. Set it up with the commands printed at the end of the `kubeadm init` output:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Running `kubectl get nodes` again now connects successfully. There is a single control-plane node, and its status is NotReady.

root@k8smaster01:~# kubectl get nodes

The connection to the server localhost:8080 was refused - did you specify the right host or port?

root@k8smaster01:~# mkdir -p $HOME/.kube

root@k8smaster01:~# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

root@k8smaster01:~# sudo chown $(id -u):$(id -g) $HOME/.kube/config

root@k8smaster01:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster01 NotReady control-plane 14m v1.25.2

root@k8smaster01:~#

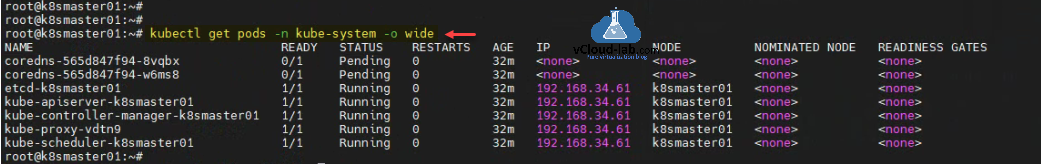

Check the status of the pods in the kube-system namespace with `kubectl get pods -n kube-system -o wide`. The two coredns pods are stuck in Pending status, and this needs to be fixed.

root@k8smaster01:~# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-565d847f94-8vqbx 0/1 Pending 0 32m <none> <none> <none> <none>

coredns-565d847f94-w6ms8 0/1 Pending 0 32m <none> <none> <none> <none>

etcd-k8smaster01 1/1 Running 0 32m 192.168.34.61 k8smaster01 <none> <none>

kube-apiserver-k8smaster01 1/1 Running 0 32m 192.168.34.61 k8smaster01 <none> <none>

kube-controller-manager-k8smaster01 1/1 Running 0 32m 192.168.34.61 k8smaster01 <none> <none>

kube-proxy-vdtn9 1/1 Running 0 32m 192.168.34.61 k8smaster01 <none> <none>

kube-scheduler-k8smaster01 1/1 Running 0 32m 192.168.34.61 k8smaster01 <none> <none>

root@k8smaster01:~#

You must deploy a Container Network Interface (CNI) based Pod network add-on so that your Pods can communicate with each other. Cluster DNS (CoreDNS) will not start until a network is installed, and pod-to-pod communication will not work until networking is in place.
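On a single node, checking pod status by eye is enough. On larger clusters, a small filter helps. Below is a minimal sketch with a hypothetical helper, `pods_not_running`. It reads the output of `kubectl get pods -A --no-headers`, where STATUS is the fourth column, and lists every pod that is not Running or Completed.

```shell
# Hypothetical helper: read `kubectl get pods -A --no-headers` on stdin and print
# namespace/name and status for each pod that is not Running or Completed.
pods_not_running() {
    awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}

# On the control-plane node:
#   kubectl get pods -A --no-headers | pods_not_running
```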

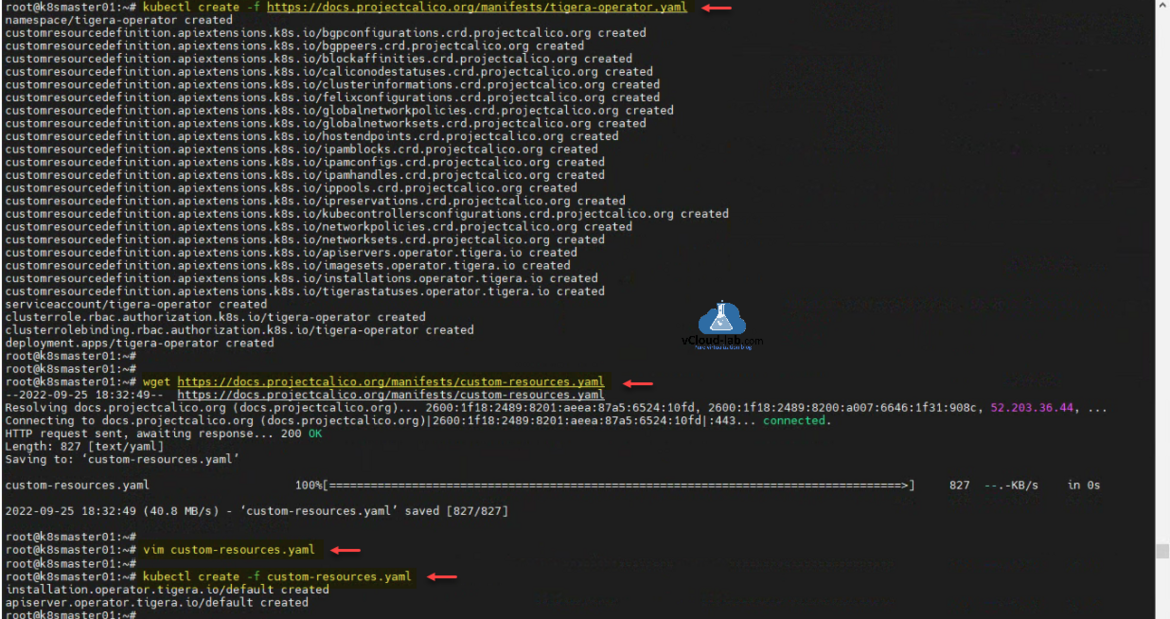

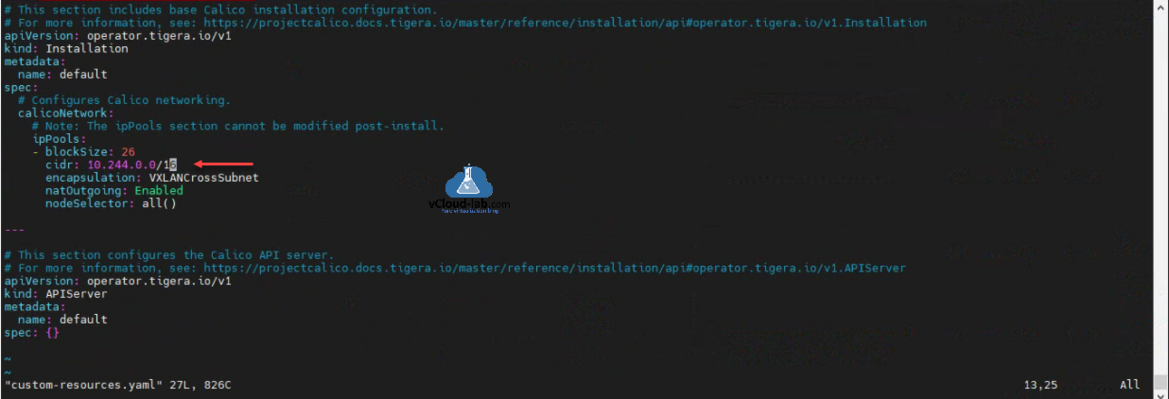

For this I am using the Project Calico CNI networking plugin. Install the Tigera operator with `kubectl create -f https://docs.projectcalico.org/manifests/tigera-operator.yaml`. Next, download the custom resources manifest with `wget https://docs.projectcalico.org/manifests/custom-resources.yaml` and open it with `vim custom-resources.yaml`. Change the `cidr` value to the pod network block used earlier with `kubeadm init`, 10.244.0.0/16. Once the file is edited, create the resources on the cluster with `kubectl create -f custom-resources.yaml`. The transcript below also shows the Flannel manifest being applied before Calico. A cluster normally needs only one CNI plugin, so you do not need the Flannel step if you follow the Calico steps.

root@k8smaster01:~# kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

root@k8smaster01:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster01 Ready control-plane 10m v1.25.2

root@k8smaster01:~# kubectl create -f https://docs.projectcalico.org/manifests/tigera-operator.yaml

namespace/tigera-operator created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/apiservers.operator.tigera.io created

customresourcedefinition.apiextensions.k8s.io/imagesets.operator.tigera.io created

customresourcedefinition.apiextensions.k8s.io/installations.operator.tigera.io created

customresourcedefinition.apiextensions.k8s.io/tigerastatuses.operator.tigera.io created

serviceaccount/tigera-operator created

clusterrole.rbac.authorization.k8s.io/tigera-operator created

clusterrolebinding.rbac.authorization.k8s.io/tigera-operator created

deployment.apps/tigera-operator created

root@k8smaster01:~# wget https://docs.projectcalico.org/manifests/custom-resources.yaml

--2022-09-25 18:32:49-- https://docs.projectcalico.org/manifests/custom-resources.yaml

Resolving docs.projectcalico.org (docs.projectcalico.org)... 2600:1f18:2489:8201:aeea:87a5:6524:10fd, 2600:1f18:2489:8200:a007:6646:1f31:908c, 52.203.36.44, ...

Connecting to docs.projectcalico.org (docs.projectcalico.org)|2600:1f18:2489:8201:aeea:87a5:6524:10fd|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 827 [text/yaml]

Saving to: ‘custom-resources.yaml’

custom-resources.yaml 100%[===================================================================================>] 827 --.-KB/s in 0s

2022-09-25 18:32:49 (40.8 MB/s) - ‘custom-resources.yaml’ saved [827/827]

root@k8smaster01:~# vim custom-resources.yaml

root@k8smaster01:~# kubectl create -f custom-resources.yaml

installation.operator.tigera.io/default created

apiserver.operator.tigera.io/default created

root@k8smaster01:~#

For reference, this is the content of custom-resources.yaml, showing where the cidr change goes:

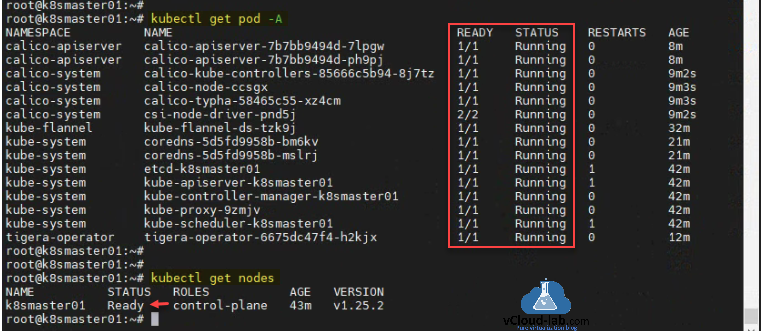
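You can also script the cidr edit instead of doing it in vim. Below is a minimal sketch with a hypothetical helper, `set_calico_cidr`. It assumes the file contains a single `cidr:` key under `ipPools`, as the downloaded manifest does (its default value is 192.168.0.0/16).

```shell
# Hypothetical helper: set the Calico IP pool CIDR in custom-resources.yaml so it
# matches the --pod-network-cidr passed to kubeadm init.
set_calico_cidr() {
    file="$1"
    cidr="${2:-10.244.0.0/16}"
    # Replace the value of every "cidr:" key while keeping its indentation.
    sed -i -E "s#^([[:space:]]*cidr:[[:space:]]*).*#\1${cidr}#" "$file"
}

# Usage before creating the resources:
#   set_calico_cidr custom-resources.yaml 10.244.0.0/16
#   kubectl create -f custom-resources.yaml
```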

The last step is to verify the pods in the cluster with `kubectl get pod -A`. The networking pods are deployed, and everything is ready and running, including the CoreDNS pods. Check the node status with `kubectl get nodes`. The node is now in Ready status.

root@k8smaster01:~# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

calico-apiserver calico-apiserver-7b7bb9494d-7lpgw 1/1 Running 0 8m

calico-apiserver calico-apiserver-7b7bb9494d-ph9pj 1/1 Running 0 8m

calico-system calico-kube-controllers-85666c5b94-8j7tz 1/1 Running 0 9m2s

calico-system calico-node-ccsgx 1/1 Running 0 9m3s

calico-system calico-typha-58465c55-xz4cm 1/1 Running 0 9m3s

calico-system csi-node-driver-pnd5j 2/2 Running 0 9m2s

kube-flannel kube-flannel-ds-tzk9j 1/1 Running 0 32m

kube-system coredns-5d5fd9958b-bm6kv 1/1 Running 0 21m

kube-system coredns-5d5fd9958b-mslrj 1/1 Running 0 21m

kube-system etcd-k8smaster01 1/1 Running 1 42m

kube-system kube-apiserver-k8smaster01 1/1 Running 1 42m

kube-system kube-controller-manager-k8smaster01 1/1 Running 0 42m

kube-system kube-proxy-9zmjv 1/1 Running 0 42m

kube-system kube-scheduler-k8smaster01 1/1 Running 1 42m

tigera-operator tigera-operator-6675dc47f4-h2kjx 1/1 Running 0 12m

root@k8smaster01:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster01 Ready control-plane 43m v1.25.2
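To check node readiness in a script rather than by eye, you have two options. You can block with `kubectl wait --for=condition=Ready node --all --timeout=300s`, or you can filter the output of `kubectl get nodes --no-headers`. Below is a minimal sketch of the second approach. It uses a hypothetical helper, `nodes_not_ready`, that prints any node whose STATUS column is not exactly Ready.

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` on stdin and print the
# name and status of every node whose STATUS is not exactly "Ready".
nodes_not_ready() {
    awk '$2 != "Ready" { print $1, $2 }'
}

# On the control-plane node (no output means every node is Ready):
#   kubectl get nodes --no-headers | nodes_not_ready
```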

In the next article I will join a Kubernetes worker node to this master node: How to install kubernetes worker node on ubuntu Part 2.
